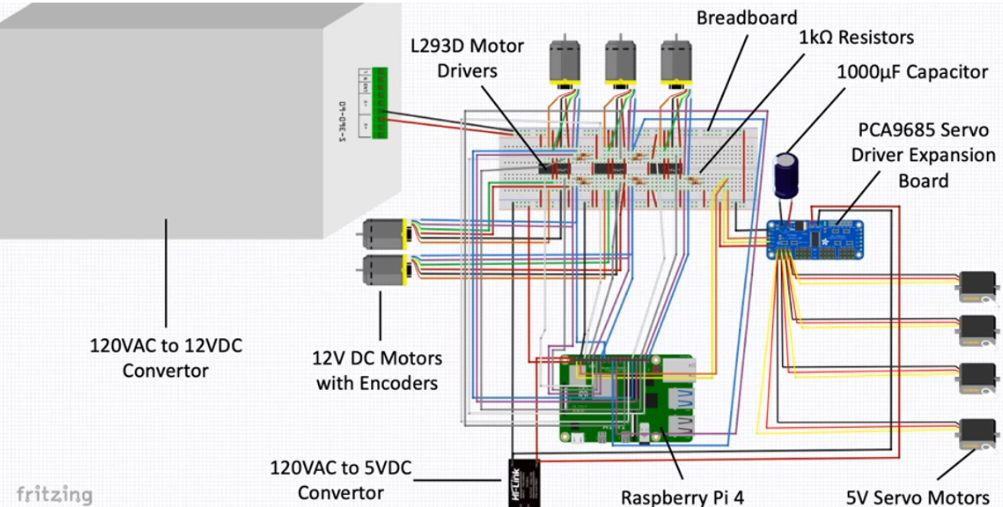

This was a project for a mechatronics course near the end of my undergrad degree in 2020. The goal was to build a robot capable of matching human poses in real time. Results can be seen in the videos below. A desktop computer to the left has a camera mounted on its monitor. A GPU in the desktop runs a convolutional neural network that converts the stream of images from the camera into a stream of estimated 3D stick figures, which can be seen on the monitor. Target motor angles are then computed from each 3D pose and sent over an Ethernet cable to a Raspberry Pi in the robot, which runs a basic control loop to match the desired pose.
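To give a feel for what a basic control loop on the Raspberry Pi could look like, here is a minimal sketch of one proportional update per joint. This is not the code that ran on the robot: the function name `control_step`, the `gain` and `max_step` parameters, and the use of degrees are all assumptions made for illustration, and motor I/O is left out entirely.

```python
def control_step(current, target, gain=0.5, max_step=5.0):
    """Move each joint angle a fraction of the way toward its target.

    current, target: lists of joint angles in degrees (assumed units).
    gain: proportional gain (hypothetical value).
    max_step: largest allowed change per call, in degrees (hypothetical value).
    """
    updated = []
    for cur, tgt in zip(current, target):
        step = gain * (tgt - cur)
        # Clamp the step so a sudden jump in the target pose
        # does not command an abrupt motor movement.
        step = max(-max_step, min(max_step, step))
        updated.append(cur + step)
    return updated


if __name__ == "__main__":
    angles = [0.0, 0.0]
    target = [90.0, -30.0]
    for _ in range(50):
        angles = control_step(angles, target)
    print(angles)
```

Calling `control_step` repeatedly with the latest target angles moves the joints toward the pose in bounded increments, which is one simple way to keep the motion smooth when the pose estimate is noisy.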

After the convolutional neural network predicts the 3D stick figure, the target motor angles need to be calculated from it. The result of this process can be viewed below.

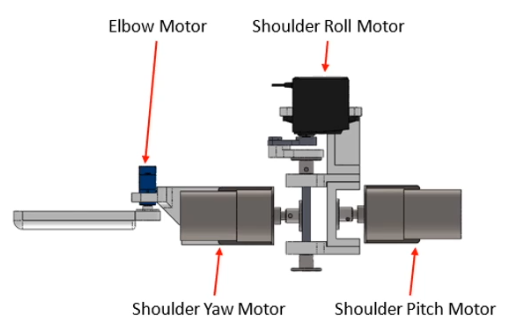

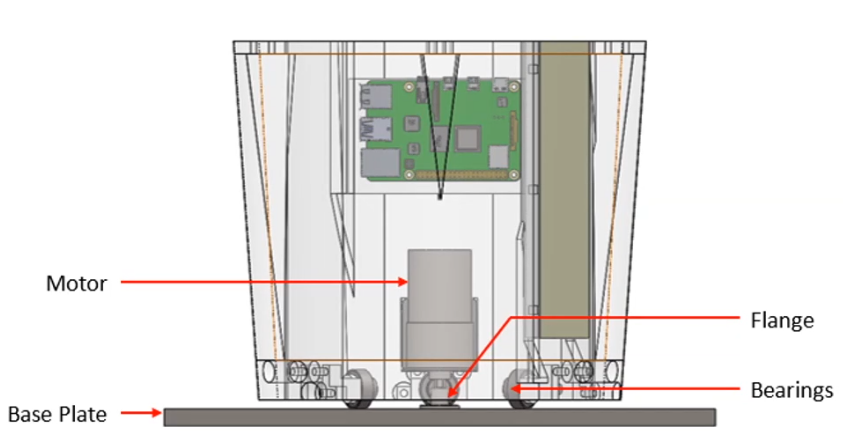
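As a rough illustration of how a motor angle can be derived from the 3D stick figure, the sketch below computes the elbow angle from three keypoints using the dot product of the two arm segments. The function name `joint_angle` and the keypoint layout (plain `(x, y, z)` tuples for shoulder, elbow, and wrist) are assumptions for this example, not the exact method used in the project. The shoulder, with its three degrees of freedom, needs a more involved decomposition that is not shown here.

```python
import math


def joint_angle(a, b, c):
    """Return the angle in degrees at point b between segments b->a and b->c.

    a, b, c: 3D points as (x, y, z) tuples, e.g. shoulder, elbow, wrist
    (assumed keypoint format).
    """
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(v1, v2))
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    if n1 == 0 or n2 == 0:
        raise ValueError("degenerate limb segment")
    # Clamp to [-1, 1] to guard against floating-point rounding before acos.
    cos_theta = max(-1.0, min(1.0, dot / (n1 * n2)))
    return math.degrees(math.acos(cos_theta))


if __name__ == "__main__":
    shoulder = (0.0, 0.0, 0.0)
    elbow = (1.0, 0.0, 0.0)
    wrist = (1.0, 1.0, 0.0)
    print(joint_angle(shoulder, elbow, wrist))  # right-angle bend at the elbow
```

With this convention a fully straight arm gives 180 degrees and a right-angle bend gives 90 degrees; mapping that value to an actual motor command depends on how the motor is mounted and zeroed.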

The primary mechanical feature of the robot is the arm movement. The design roughly follows human anatomy, with three degrees of freedom at the shoulder joint and one at the elbow. An additional motor at the base of the robot performs the waist rotation. The electrical design is also included below.